An Evaluation Dataset and Strategy for Building Robust Multi-turn Response Selection Model


TL;DR:

- Previous neural response selection models lack a comprehensive understanding of context, which results in biased response selection.

- The authors propose an adversarial dataset, reviewed and filtered by experts, to check whether a model has learned comprehensive information rather than just comparing similar tokens.

- The proposed debiasing strategy, which makes use of a biased model, seems effective at mitigating the model’s tendency to learn biased patterns.

Introduction

- The authors argue that neural-network-based response selection models share a common limitation: they struggle to generalize to adversarial settings.

- The most likely reason they suggest is that the models learn superficial patterns rather than a comprehensive understanding of context.

- A method for testing a model’s contextual understanding is therefore required.

Adversarial dataset

- The authors propose 7 adversarial datasets. Each pairs one correct response (gold) with one incorrect response (hard negative), and the hard negative in each dataset is created by rule-based manipulation according to the following definitions.

- Repetition: sampling one of the utterances in the context.

- Negation: removing negative words such as not, hardly, or few, or replacing them with positive words, to test whether the model understands semantic reversal.

- Tense: removing tense morphemes or expressions.

- Subject-Object: replacing the subject with the object or vice versa, to examine whether the model fully understands the break in context caused by the replacement.

- Lexical Contradiction: replacing a word with another word of opposite meaning, such as hot and cold.

- Interrogative Word: creating 5W1H questions that ask for information already shared, explicitly or implicitly, in the previous context.

- Topic: replacing a key sentence or term with another sentence or term in the context; the replacement does not have to be semantically opposite.
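To make the rule-based construction concrete, here is a minimal sketch of three of the manipulations (Repetition, Negation, Lexical Contradiction) on whitespace-tokenized English. The word lists, function names, and example dialogue are hypothetical and only for illustration; the paper's actual rules and lexicons are not described in this post, and its hard negatives were additionally reviewed by experts.

```python
import random

# Hypothetical toy lexicons, NOT the paper's actual word lists.
ANTONYMS = {"hot": "cold", "cold": "hot", "early": "late", "late": "early"}
NEGATION_WORDS = {"not", "never", "hardly"}


def repetition_negative(context, rng):
    """Repetition: reuse one utterance from the context as the response."""
    return rng.choice(context)


def negation_negative(gold):
    """Negation: drop negative words so the meaning of the gold response flips."""
    kept = [tok for tok in gold.split() if tok.lower() not in NEGATION_WORDS]
    return " ".join(kept)


def lexical_contradiction_negative(gold):
    """Lexical Contradiction: swap words for an antonym when one is known."""
    return " ".join(ANTONYMS.get(tok.lower(), tok) for tok in gold.split())


context = ["Is the coffee ready?", "Yes, I just made it."]
gold = "Careful, it is hot and not sweet."
rng = random.Random(0)  # seeded only so the sketch is reproducible

print(repetition_negative(context, rng))
print(negation_negative(gold))               # Careful, it is hot and sweet.
print(lexical_contradiction_negative(gold))  # Careful, it is cold and not sweet.
```

Each hard negative stays very close to the context or the gold response on the surface, which is exactly what makes it hard for a model that relies on token overlap.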

Debiasing strategy

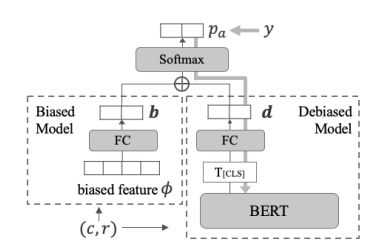

- Biased model: a single fully-connected layer with softmax activation. Its input is a list of Jaccard similarities, computed between the context and the response and between the last utterance and the response, at both the morpheme level and the wordpiece level.

- Debiased model: a BERT-based classifier with a cross-encoder architecture.

- How to compose?

- The final classification probabilities are obtained by summing the softmax outputs of the biased model and the debiased model, then passing the sum through a softmax layer again.
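The pieces above can be sketched in plain Python. This is only an illustration under stated assumptions: `str.split` stands in for the morpheme-level and wordpiece-level tokenizers, the probability values are made up, the trainable fully-connected layer and BERT are left out, and `combine` follows the composition as summarized in this post rather than the authors' code.

```python
import math


def jaccard(a, b):
    """Jaccard similarity between two token collections: |A & B| / |A | B|."""
    set_a, set_b = set(a), set(b)
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def bias_features(context, response, tokenize):
    """Overlap features for one tokenizer:
    [context vs. response, last utterance vs. response]."""
    resp = tokenize(response)
    ctx = [tok for utt in context for tok in tokenize(utt)]
    last = tokenize(context[-1])
    return [jaccard(ctx, resp), jaccard(last, resp)]


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def combine(biased_probs, debiased_probs):
    """Sum the two softmax outputs, then apply softmax again."""
    return softmax([b + d for b, d in zip(biased_probs, debiased_probs)])


context = ["do you like tea", "i like green tea"]
response = "i like green tea"  # a Repetition-style candidate

# Whitespace tokenization is a stand-in for both tokenizers here.
feats = bias_features(context, response, str.split)
print(feats)

# Made-up [incorrect, correct] probabilities for the two models.
biased = softmax([0.2, 1.5])     # overlap-driven model favors "correct"
debiased = softmax([2.0, -1.0])  # cross-encoder favors "incorrect"
print(combine(biased, debiased))
```

The high overlap features for a repeated utterance show why the biased model is a useful probe: it captures exactly the surface cue that the main model should not depend on.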

Experimental Results

- Applying the debiasing strategy to the baseline model, BERT, improves performance on 6 out of 7 adversarial datasets.

- The debiasing strategy shows a larger performance gain on UMS-BERT, which indicates that it has a synergy with auxiliary tasks that help the model learn contextualized information.
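Since each adversarial example pairs one gold response with one hard negative, one natural way to score a model is the fraction of pairs where the gold response gets the higher score. The sketch below assumes that metric; the post does not state which metric the paper reports. `overlap_score` is a hypothetical stand-in for a model that relies only on token overlap, and the example dialogue is invented.

```python
def overlap_score(context, response):
    """Toy scorer: share of response tokens that also occur in the context."""
    ctx = {tok for utt in context for tok in utt.split()}
    resp = set(response.split())
    return len(resp & ctx) / len(resp) if resp else 0.0


def pairwise_accuracy(score_fn, examples):
    """Fraction of (context, gold, hard_negative) triples where gold scores higher."""
    correct = sum(
        score_fn(ctx, gold) > score_fn(ctx, neg) for ctx, gold, neg in examples
    )
    return correct / len(examples)


# One Repetition-style example: the hard negative copies a context utterance.
examples = [
    (
        ["where are you going", "to the station"],
        "which train are you taking",  # gold
        "to the station",              # hard negative
    )
]

print(pairwise_accuracy(overlap_score, examples))  # 0.0
```

The overlap-only scorer prefers the copied utterance and fails this example, which is the kind of behavior the adversarial datasets are meant to expose.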

Conclusion

- The proposed adversarial dataset can be used to examine whether a model fully understands the whole context, and the debiasing strategy can be effective at mitigating biased pattern learning.

Reference and Implementation:

- paper: An Evaluation Dataset and Strategy for Building Robust Multi-turn Response Selection Model, 10 Sep 2021